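Since deep learning took first place by an overwhelming margin in a major international image recognition competition in 2012, applying deep learning to image recognition has been a very active area. At STAIR Lab, we applied this technology to develop an AI that identifies the species of a flower.

There are tens of thousands of flower species in the world; at present the system can identify 406 species that are relatively common in everyday surroundings.

See here for the full list of flower species.

Because most of the flower photos used as training data came from Europe and North America, flowers native to Japan are unfortunately underrepresented.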

Model

The deep learning model used for recognition is GoogleNet with Batch Normalization. We used Soumith Chintala's torch implementation almost as is.

https://github.com/soumith/imagenet-multiGPU.torch

The only change was setting the number of outputs of the final softmax layer to 406.

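Dataset

We downloaded the flower images that ImageNet collects by species. There are many species, but many of them do not have enough images. See here for the list of WNIDs we used; the list also gives the corresponding English and Japanese names.

Note: Unfortunately, quite a few of the flower images on image-net.org are mislabeled. Before using them, you therefore need to remove the mislabeled data and add images to make up for what was removed. For this project we prepared at least 700 training images per species.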

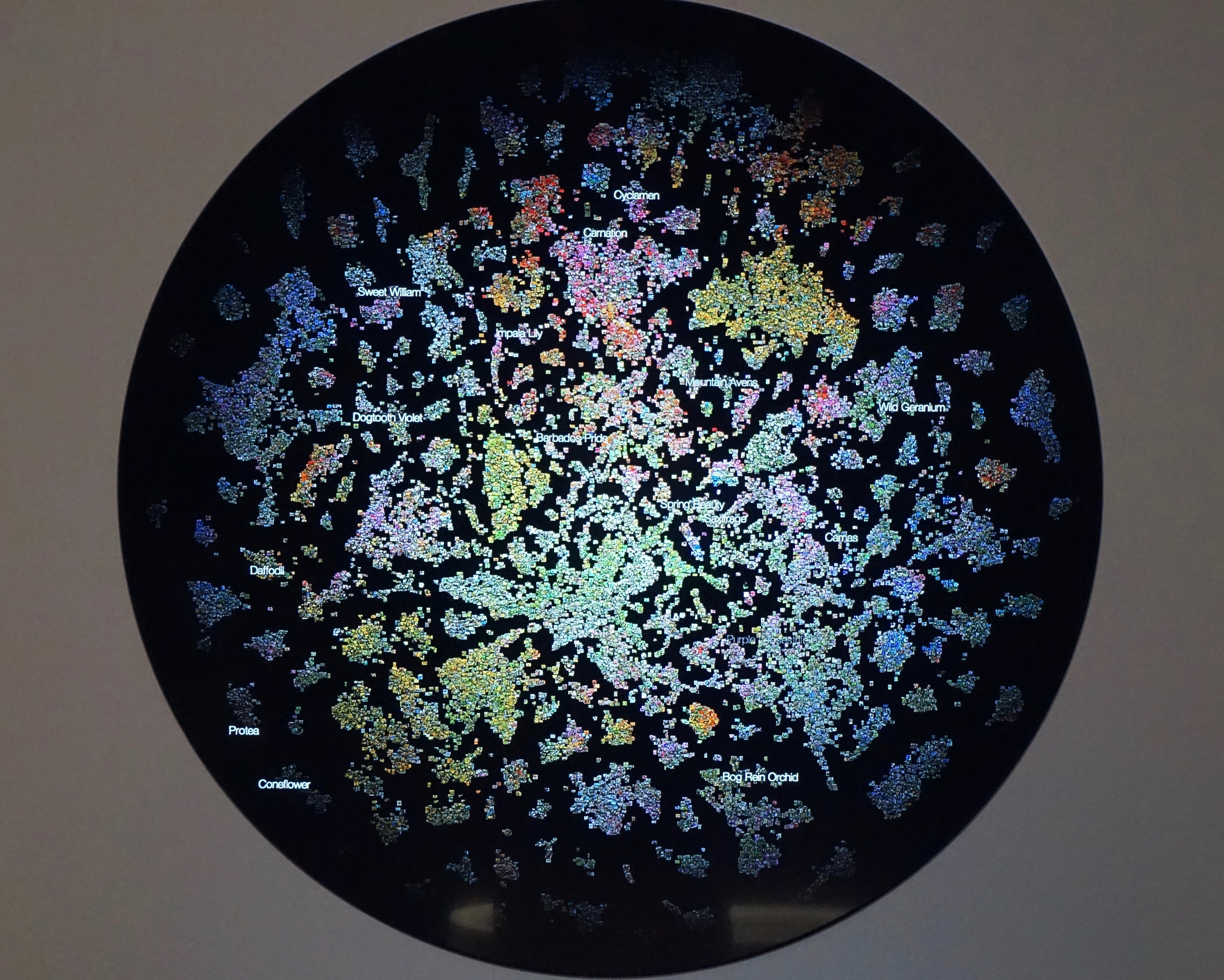

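Flower Map

In the later stages of the model, each image is represented as a 1000-dimensional vector. The Flower Map is the result of converting these vectors to 2-dimensional vectors with a method called t-SNE.

Because many images overlap, the implementation places the images at randomly shifted positions along the depth direction. Thanks to t-SNE, the 406 categories are visualized cleanly.

Categories that can be clearly distinguished from the others appear as isolated islands, while those that cannot become part of a large continent or form islands joined to one another by land.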

Performance

We prepared 50 test images for each species and measured the following performance:

top1 accuracy = 77.5%

top5 accuracy = 96.5%

Since Hinton's group won ILSVRC 2012 with its groundbreaking deep learning system, SuperVision, applications of deep learning to visual tasks have been very active. We at STAIR Lab developed an AI engine that uses this technology to recognize the species of a flower.

It is said that there are hundreds of thousands of flower species in the world. Our AI engine recognizes 406 of them, mostly flowers commonly found in our neighborhood.

Please see the following for the entire list of recognizable flowers.

Deep Learning Model

The task here is multi-class classification; more specifically, it is an example of fine-grained recognition.

The number of classes is 406. This number was determined by the availability of photos (see the Dataset section below).

The model used is GoogleNet with batch normalization. We used the torch code written by Dr. Soumith Chintala (https://github.com/soumith/imagenet-multiGPU.torch). Only the last layer was modified to match the number of classes, 406 (see below).

We also experimented with a model pre-trained on the ImageNet dataset, but the results were not much different from those of the model trained from scratch.
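To make the 406-way output concrete, here is a minimal Python sketch of what a softmax over 406 class scores computes. It is not the Torch code from the repository above; the random scores are hypothetical stand-ins for the output of the network's final layer, and `NUM_CLASSES`, `softmax`, and `predicted_class` are names chosen only for this illustration.

```python
import math
import random

NUM_CLASSES = 406  # number of flower classes stated in the text


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores standing in for the output of the final layer.
rng = random.Random(0)
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_CLASSES)]

probs = softmax(logits)

# The predicted class is the index with the highest probability.
predicted_class = max(range(NUM_CLASSES), key=lambda i: probs[i])
```

Changing the number of classes only changes the length of the score vector, which is why adapting the network to this task needed a modification to the last layer alone.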

Dataset

The flower images were collected from ImageNet. There are many flower nodes in ImageNet, but some do not have enough images. The list of the 406 adopted nodes (WNIDs) is here. For each of the 406 nodes, we collected at least 700 images.

Attention: Some images stored in ImageNet are incorrectly labeled. If you want to use them for your own deep learning experiments, you first need to clean the data by removing the incorrectly labeled images. You may also need to add images to replace the ones you removed. This is what we did, and it was the hardest part of this experiment.
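The cleaning step described above could be sketched roughly as follows. This is an illustration under assumptions, not the procedure we actually ran: it assumes the mislabeled images have already been identified by some other means and are passed in as a set, and the function name, file names, and WNIDs are hypothetical.

```python
MIN_IMAGES = 700  # per-node minimum stated in the text


def clean_and_select(images_by_wnid, mislabeled, min_images=MIN_IMAGES):
    """Drop flagged images, then keep nodes that still have enough images.

    Returns (kept, short):
      kept  maps WNID -> cleaned image list for nodes meeting the minimum
      short maps WNID -> number of images still needed to reach the minimum
    """
    kept, short = {}, {}
    for wnid, images in images_by_wnid.items():
        cleaned = [img for img in images if img not in mislabeled]
        if len(cleaned) >= min_images:
            kept[wnid] = cleaned
        else:
            short[wnid] = min_images - len(cleaned)
    return kept, short


# Tiny hypothetical example with a lowered minimum so it fits on screen.
images_by_wnid = {
    "n00000001": ["a.jpg", "b.jpg", "c.jpg", "d.jpg"],
    "n00000002": ["e.jpg", "f.jpg", "g.jpg"],
}
mislabeled = {"b.jpg", "f.jpg"}
kept, short = clean_and_select(images_by_wnid, mislabeled, min_images=3)
```

In this toy run the first node survives cleaning, while the second is reported as one image short, which is the signal that it needs supplementary images before it can be used.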

Flower Map

Each image is transformed into a 1,000-dimensional vector by the deep convolutional neural network. These vectors can be reduced further to two-dimensional vectors with the well-known dimensionality reduction method t-SNE. Flower Map is a map that plots these two-dimensional vectors: each image is placed at its t-SNE 2D coordinates. To avoid too much overlap, we give each image a random z-axis coordinate, so in practice Flower Map is a 3D map. You can see that a well-learned category looks like an isolated island. Some islands merge into a large continent, which implies that those categories are difficult to distinguish from one another.
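The random z-offset placement described above can be illustrated with a short Python sketch. It assumes the t-SNE 2D coordinates have already been computed elsewhere; the function name `place_on_map`, the `z_range` parameter, and the sample coordinates are hypothetical and not taken from the actual Flower Map implementation.

```python
import random


def place_on_map(coords_2d, z_range=1.0, seed=0):
    """Attach a random depth to each 2D point so overlapping images separate.

    coords_2d: list of (x, y) pairs, e.g. the output of t-SNE.
    Returns a list of (x, y, z) triples with z drawn uniformly from
    [0, z_range]; x and y are left unchanged.
    """
    rng = random.Random(seed)  # fixed seed keeps the layout reproducible
    return [(x, y, rng.uniform(0.0, z_range)) for x, y in coords_2d]


# Two images sharing the same 2D position plus one image elsewhere.
coords_2d = [(0.5, 0.5), (0.5, 0.5), (-1.2, 3.4)]
positions = place_on_map(coords_2d, z_range=10.0)
```

Because only the depth is randomized, the island and continent structure produced by t-SNE in the x-y plane is preserved.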

Performance

On a test dataset with 50 images per node, the model achieves the following:

top1 accuracy = 77.5%

top5 accuracy = 96.5%
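For readers unfamiliar with the two metrics, the following Python sketch shows how top-k accuracy is typically computed: a prediction counts as correct if the true class is among the k highest-scoring classes. The scores and labels are made-up toy data with 3 classes, not our test set, and `top_k_accuracy` is a name chosen for this illustration.

```python
def top_k_accuracy(scores, labels, k):
    """Fraction of samples whose true label is among the k top-scoring classes."""
    correct = 0
    for row, label in zip(scores, labels):
        # Class indices sorted by descending score, truncated to the best k.
        top_k = sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:k]
        if label in top_k:
            correct += 1
    return correct / len(labels)


# Toy per-class scores for 4 samples and 3 classes (hypothetical data).
scores = [
    [0.1, 0.7, 0.2],  # true class 1: ranked first
    [0.5, 0.3, 0.2],  # true class 1: ranked second
    [0.2, 0.3, 0.5],  # true class 0: ranked third
    [0.6, 0.3, 0.1],  # true class 0: ranked first
]
labels = [1, 1, 0, 0]

top1 = top_k_accuracy(scores, labels, k=1)  # 2 of 4 correct -> 0.5
top2 = top_k_accuracy(scores, labels, k=2)  # 3 of 4 correct -> 0.75
```

With 406 classes and k=5, the same function yields the top5 figure: the model is credited whenever the true species appears among its five best guesses.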